-

Notifications

You must be signed in to change notification settings - Fork 155

test(all): switch to use GitHub strategy matrix and fix flaky tests #828

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

… (and uuid is trimed off)

| @@ -57,7 +57,7 @@ export const createStackWithLambdaFunction = (params: StackWithLambdaFunctionOpt | |||

| }; | |||

|

|

|||

| export const generateUniqueName = (name_prefix: string, uuid: string, runtime: string, testName: string): string => | |||

| `${name_prefix}-${runtime}-${testName}-${uuid}`.substring(0, 64); | |||

| `${name_prefix}-${runtime}-${uuid.substring(0,5)}-${testName}`.substring(0, 64); | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

When test name is really long, the uuid is truncated on some resources. This results in name clashing when the same test cases are run at the same time.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Already looks good and passing!

As only note I'd recommend removing concurrently from the package-lock.json. Since we are no longer using it we can avoid having to maintain future updates for it.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the PR! I left some comments/questions but already looking good :-)

| strategy: | ||

| matrix: | ||

| version: [12, 14] | ||

| package: [logger, metrics, tracing] |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Outside of the scope of this PR, but we should rename the tracer folder to "tracer" for consistency. Created an issue:

#829

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| export const ONE_MINUTE = 60 * 1000; | ||

| export const TEST_CASE_TIMEOUT = ONE_MINUTE; | ||

| export const SETUP_TIMEOUT = 5 * ONE_MINUTE; | ||

| export const TEARDOWN_TIMEOUT = 5 * ONE_MINUTE; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I like this syntax. More readable!

| @@ -14,11 +14,16 @@ import { | |||

| expectedCustomResponseValue, | |||

| expectedCustomErrorMessage, | |||

| } from '../e2e/constants'; | |||

| import { FunctionSegmentNotDefinedError } from './FunctionSegmentNotDefinedError'; | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Question: what do you mean here with segment not defined?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If X-Ray hasn't fully processed all segments, some of them may be missing.

In our case, we may try to get the Function (AWS::Lambda::Function) segment too early. I use this custom error to distinguish the type of error form others.

| // This flag may be set if the segment hasn't been fully processed | ||

| // The trace may have already appeared in the `getTraceSummaries` response | ||

| // but a segment may still be in_progress | ||

| in_progress?: boolean |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

By looking at the documentation, also the end_time key might be not defined, correct?

end_time – number that is the time the segment was closed. For example, 1480615200.090 or 1.480615200090E9. Specify either an end_time or in_progress.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| } | ||

| } | ||

|

|

||

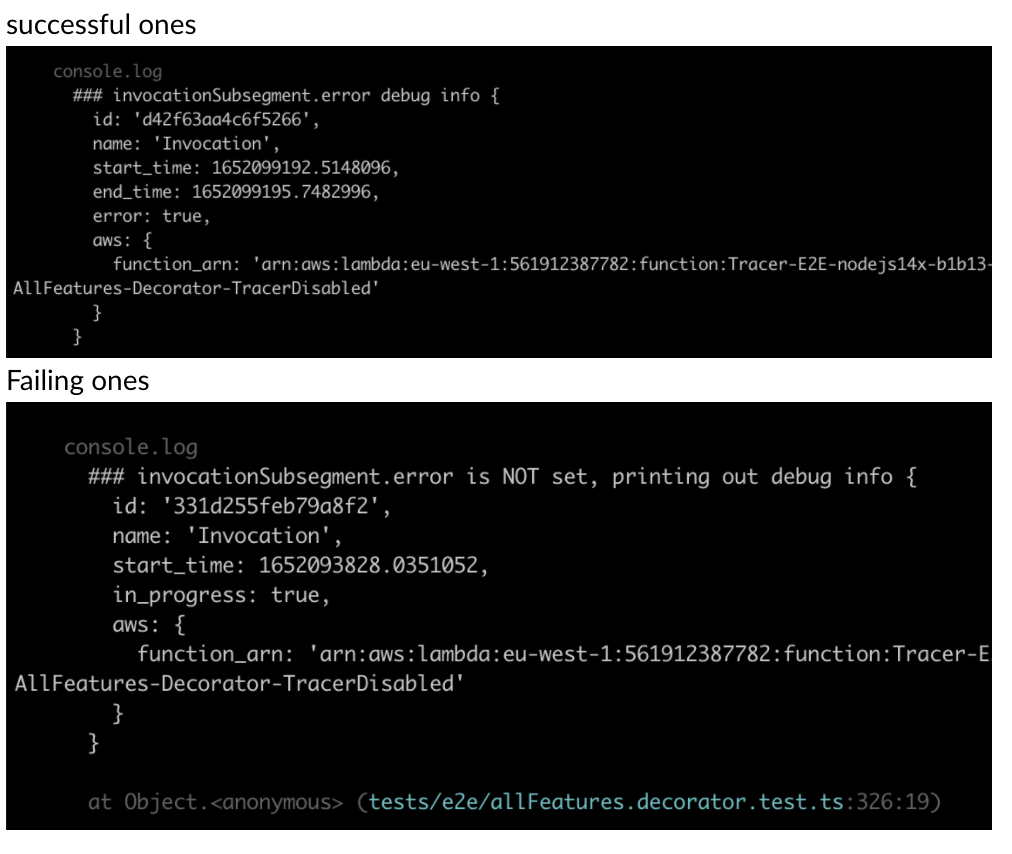

| retryFlag = retryFlag || (!!invocationSubsegment.in_progress); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Question: essentially here we are looking at the in_progress key. If it's set, we retry. Correct?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

That's right for this line. But there is a case that the whole segment isn't defined at all. That's handled by the lines above.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I see! thanks for clarifying

Description of your changes

The e2e tests have been flaky due to following reasons:

error). Causes: We removed the explicit wait on Tracer during refactoring. We now rely on polling until we receive the expected number of traces. However, not all subsegments are available in the traces yet.This PR addresses the issues by:

How to verify this change

You can trigger the e2e test workflow manually.

I've run this 5 times without any failure so far. > https://github.com/awslabs/aws-lambda-powertools-typescript/actions/runs/2295223762.

Related issues, RFCs

#825 <-- the log will be descriptive with Matrix strategy

PR status

Is this ready for review?: YES

Is it a breaking change?: NO

Checklist

Breaking change checklist

By submitting this pull request, I confirm that you can use, modify, copy, and redistribute this contribution, under the terms of your choice.